Introduction

Foreword

MetalK8s is a Kubernetes distribution with a number of add-ons selected for on-premises deployments, including pre-configured monitoring and alerting, self-healing system configuration, and more.

Installing a MetalK8s cluster can be broken down into the following steps:

Setup of the environment

Deployment of the Bootstrap node, the first machine in the cluster

Expansion of the cluster, orchestrated from the Bootstrap node

Post-installation configuration steps and sanity checks

Warning

MetalK8s is not designed to handle geographically distributed multi-site architectures. Instead, it provides a highly resilient cluster at the datacenter scale. To manage multiple sites, look into application-level solutions or alternatives from Kubernetes community groups such as the Multicluster SIG.

Choosing a Deployment Architecture

Before starting the installation, it’s best to choose an architecture.

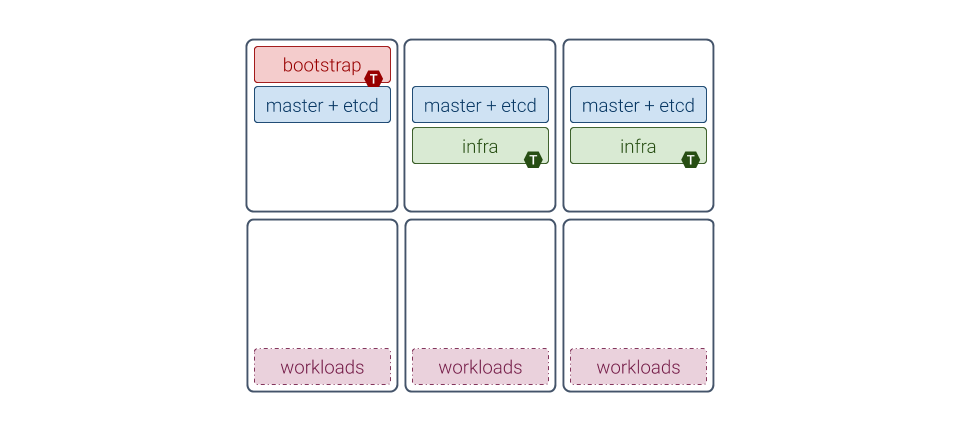

Standard Architecture

The recommended architecture when installing a small MetalK8s cluster emphasizes ease of installation, while providing high stability for scheduled workloads. This architecture includes:

One machine running Bootstrap and control plane services

Two other machines running control plane and infra services

Three more machines for workload applications

Machines dedicated to the control plane do not require many resources (see the sizing notes below), and can safely run as virtual machines. Running workloads on dedicated machines makes them simpler to size, as the impact of MetalK8s services on those machines will be negligible.

Note

“Machines” may indicate bare-metal servers or VMs interchangeably.

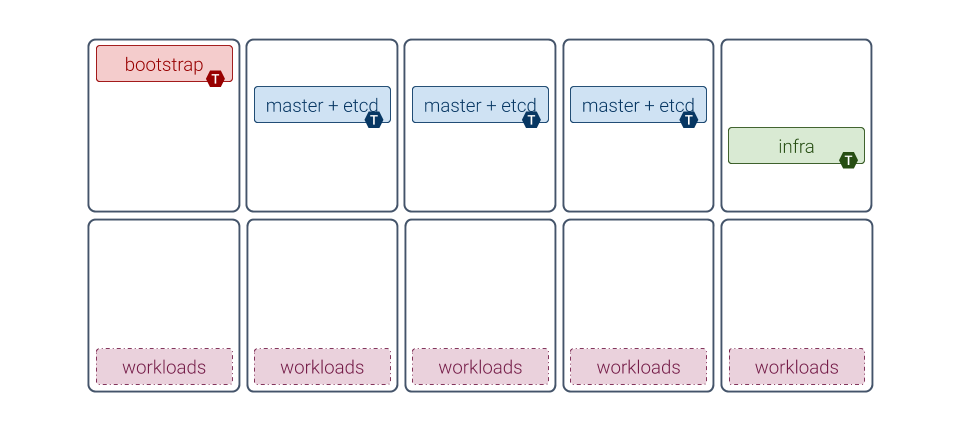

Extended Architecture

This example architecture focuses on reliability rather than compactness, offering the finest control over the entire platform:

One machine dedicated to running Bootstrap services (see the Bootstrap role definition below)

Three extra machines (or five for a very large cluster, for example more than 100 nodes) for running the Kubernetes control plane (with core K8s services and the backing etcd DB)

One or more machines dedicated to running infra services (see the infra role)

Any number of machines dedicated to running applications, the number and sizing depending on the application (for instance, Zenko recommends three or more machines)
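The choice of three or five control plane machines can be related to how etcd keeps working only while a majority of its members is available. The following is a minimal sketch of that arithmetic, assuming the usual majority-quorum rule; the function names are illustrative and not part of MetalK8s.

```python
def quorum_size(members: int) -> int:
    """Smallest majority of an etcd cluster with `members` voting members."""
    if members < 1:
        raise ValueError("an etcd cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """Number of members that can fail while a majority remains available."""
    return members - quorum_size(members)


if __name__ == "__main__":
    for n in (1, 3, 4, 5):
        print(
            f"{n} member(s): quorum of {quorum_size(n)}, "
            f"tolerates {tolerated_failures(n)} failure(s)"
        )
```

Under this rule, an even member count tolerates no more failures than the odd count just below it, which is one reason three and five are the usual choices.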

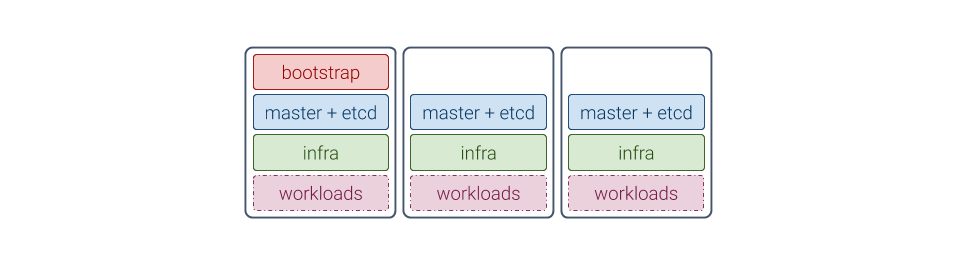

Compact Architectures

Although its design is not focused on having the smallest compute and memory footprints, MetalK8s can provide a fully functional single-node “cluster”. The bootstrap node can be configured to also allow running applications next to all other required services (see the section about taints below).

Because a single-node cluster has no resilience to machine or site failure, a three-machine cluster is the most compact recommended production architecture. This architecture includes:

Two machines running control plane services alongside infra and workload applications

One machine running bootstrap services and all other services

Note

Sizing for such compact clusters must account for the expected load. The exact impact of colocating an application with MetalK8s services must be evaluated by that application’s provider.

Variations

You can customize your architecture using combinations of roles and taints, described below, to adapt to the available infrastructure.

Generally, it is easier to monitor and operate well-isolated groups of machines in the cluster, where hardware issues only impact one group of services.

You can also evolve an architecture after initial deployment, if the underlying infrastructure also evolves (new machines can be added through the expansion mechanism, roles can be added or removed, etc.).

Concepts

Although familiarity with Kubernetes concepts is recommended, the necessary concepts to grasp before installing a MetalK8s cluster are presented here.

Nodes

Nodes are Kubernetes worker machines that allow running containers and can be managed by the cluster (see control plane services, next section).

Control and Workload Planes

The distinction between the control and workload planes is central to MetalK8s, and often referred to in other Kubernetes concepts.

The control plane is the set of machines (called “nodes”) and the services running there that make up the essential Kubernetes functionality for running containerized applications, managing declarative objects, and providing authentication/authorization to end users as well as services. The main components of a Kubernetes control plane are the API Server, the Scheduler, and the Controller Manager.

The workload plane is the set of nodes in which applications are deployed via Kubernetes objects, managed by services in the control plane.

Note

Nodes may belong to both planes, so that one can run applications alongside the control plane services.

Control plane nodes are often responsible for providing storage for the API Server by running etcd. This responsibility may be offloaded to other nodes from the workload plane (without the etcd taint).

Node Roles

A node’s responsibilities are determined using roles. Roles are stored in Node manifests using labels of the form node-role.kubernetes.io/<role-name>: ''.

MetalK8s uses five different roles, which may be combined freely:

node-role.kubernetes.io/master

The master role marks a control plane member. Control plane services can only be scheduled on master nodes.

node-role.kubernetes.io/etcd

The etcd role marks a node running etcd for API Server storage.

node-role.kubernetes.io/infra

The infra role is specific to MetalK8s. It marks nodes where non-critical cluster services (monitoring stack, UIs, etc.) are running.

node-role.kubernetes.io/bootstrap

This marks the Bootstrap node. This node is unique in the cluster, and is solely responsible for the following services:

An RPM package repository used by cluster members

An OCI registry for Pod images

A Salt Master and its associated SaltAPI

In practice, this role is used in conjunction with the master and etcd roles for bootstrapping the control plane.
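As an illustration of the label form described above, the sketch below builds the label mapping for a set of roles and reads roles back from a Node's labels. The helper names are hypothetical and only mirror the naming convention; they are not a MetalK8s or Kubernetes API.

```python
ROLE_PREFIX = "node-role.kubernetes.io/"


def role_labels(roles):
    """Return the labels that encode the given roles, each with an empty value."""
    return {f"{ROLE_PREFIX}{role}": "" for role in sorted(roles)}


def roles_from_labels(labels):
    """Recover role names from a Node's labels, ignoring unrelated labels."""
    return {
        key[len(ROLE_PREFIX):]
        for key in labels
        if key.startswith(ROLE_PREFIX)
    }


if __name__ == "__main__":
    # Roles typically combined on the Bootstrap node, per the text above.
    labels = role_labels(["bootstrap", "master", "etcd"])
    for key, value in labels.items():
        print(f"{key}: {value!r}")
```

Because roles are plain labels, combining them on one Node amounts to setting several such keys on the same manifest.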

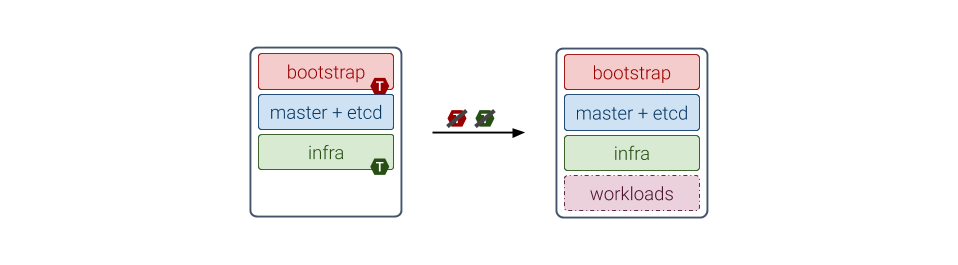

In the architecture diagrams presented above, each box represents a role (with the node-role.kubernetes.io/ prefix omitted).

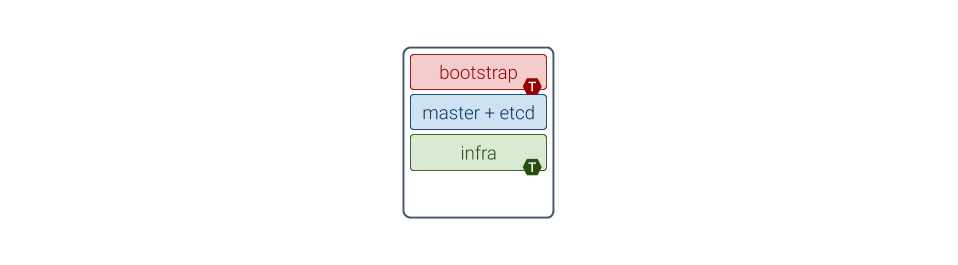

Node Taints

Taints are complementary to roles. When a taint or a set of taints is applied to a Node, only Pods with the corresponding tolerations can be scheduled on that Node.

Taints allow dedicating Nodes to specific use cases, such as running control plane services.

Refer to the architecture diagrams above for examples: each T marker on a role means the taint corresponding to this role has been applied on the Node.
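The scheduling rule described above can be sketched as a small check. This is a deliberately simplified model, assuming taints and tolerations are matched on key and effect only; real Kubernetes tolerations also support operators and values, and the type and function names here are illustrative.

```python
from typing import NamedTuple


class Taint(NamedTuple):
    key: str
    effect: str  # for example "NoSchedule"


class Toleration(NamedTuple):
    key: str
    effect: str


def can_schedule(taints, tolerations):
    """True if every NoSchedule taint on the Node is tolerated by the Pod."""
    tolerated = set(tolerations)
    return all(
        Toleration(taint.key, taint.effect) in tolerated
        for taint in taints
        if taint.effect == "NoSchedule"
    )


if __name__ == "__main__":
    infra = Taint("node-role.kubernetes.io/infra", "NoSchedule")
    # A Pod without tolerations is kept off a Node tainted for infra services.
    print(can_schedule([infra], []))
    # A Pod tolerating the infra taint can be scheduled there.
    print(can_schedule([infra], [Toleration(infra.key, infra.effect)]))
```

In this model a Node without taints accepts any Pod, and a single untolerated taint is enough to keep a Pod away.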

Note that Pods from the control plane services (corresponding to master and etcd roles) have tolerations for the bootstrap and infra taints. This is because the first Node, once bootstrapped, carries both the bootstrap and infra taints with the NoSchedule effect.

The taints applied are only tolerated by services deployed by MetalK8s. If the selected architecture requires workloads to run on the Bootstrap node, these taints must be removed.

To do this, use the following commands after deployment:

root@bootstrap $ kubectl taint nodes <bootstrap-node-name> \
    node-role.kubernetes.io/bootstrap:NoSchedule-
root@bootstrap $ kubectl taint nodes <bootstrap-node-name> \
    node-role.kubernetes.io/infra:NoSchedule-

Note

To get more in-depth information about taints and tolerations, see the official Kubernetes documentation.

Networks

A MetalK8s cluster requires a physical network for both the control plane and the workload plane Nodes. Although these may be the same network, the distinction will still be made in further references to these networks, and when referring to a Node IP address. Each Node in the cluster must belong to these two networks.

The control plane network enables cluster services to communicate with each other. The workload plane network exposes applications, including those in infra Nodes, to the outside world.


MetalK8s also enables configuring virtual networks for internal communication:

A network for Pods, defaulting to 10.233.0.0/16

A network for Services, defaulting to 10.96.0.0/12

In case of conflicts with existing infrastructure, choose other ranges during Bootstrap configuration.
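One way to spot such conflicts ahead of time is to compare the default ranges against the networks already in use. The sketch below uses Python's standard ipaddress module; the function name and the example infrastructure ranges are assumptions made for illustration.

```python
import ipaddress

# Default virtual networks, as stated in the text above.
POD_NETWORK = ipaddress.ip_network("10.233.0.0/16")
SERVICE_NETWORK = ipaddress.ip_network("10.96.0.0/12")


def find_conflicts(existing_cidrs, virtual_networks=(POD_NETWORK, SERVICE_NETWORK)):
    """Return (existing, virtual) pairs of networks whose ranges overlap."""
    conflicts = []
    for cidr in existing_cidrs:
        existing = ipaddress.ip_network(cidr)
        for virtual in virtual_networks:
            if existing.overlaps(virtual):
                conflicts.append((existing, virtual))
    return conflicts


if __name__ == "__main__":
    # Hypothetical ranges already used by the surrounding infrastructure.
    in_use = ["192.168.1.0/24", "10.100.0.0/24"]
    for existing, virtual in find_conflicts(in_use):
        print(f"{existing} overlaps {virtual}: choose another range")
```

If the list of conflicts is empty, the defaults can likely be kept; otherwise the conflicting virtual network is the one to change during Bootstrap configuration.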

Additional Notes

Sizing

Sizing the machines in a MetalK8s cluster depends on the selected architecture and anticipated changes. Refer to the documentation of the applications planned to run in the deployed cluster before completing the sizing, as their needs will compete with the cluster’s.

Each role, describing a group of services, requires a certain amount of resources to run properly. If multiple roles are used on a single Node, these requirements add up.

| Role | Services | CPU | RAM | Required Storage | Recommended Storage |
|---|---|---|---|---|---|
| bootstrap | Package repositories, container registries, Salt master | 1 core | 2 GB | Sufficient space for the product ISO archives | |
| etcd | etcd database for the K8s API | 0.5 core | 1 GB | 1 GB for /var/lib/etcd | |
| master | K8s API, scheduler, and controllers | 0.5 core | 1 GB | | |
| infra | Monitoring services, Ingress controllers | 0.5 core | 2 GB | 10 GB partition for Prometheus, 1 GB partition for Alertmanager | |
| requirements common to any Node | Salt minion, Kubelet | 0.2 core | 0.5 GB | 40 GB root partition | 100 GB or more for /var |

These numbers do not account for highly unstable workloads or other sources of unpredictable load on the cluster services. Providing a safety margin of an additional 50% of resources is recommended.
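Since the requirements of combined roles add up, a rough per-Node estimate can be computed from the CPU and RAM figures above. The following sketch sums them and applies the recommended 50% margin; the function name is hypothetical, and storage is left out because it depends on partitions rather than simple totals.

```python
# CPU cores and RAM (GB) per role, copied from the sizing figures above.
ROLE_REQUIREMENTS = {
    "bootstrap": (1.0, 2.0),
    "etcd": (0.5, 1.0),
    "master": (0.5, 1.0),
    "infra": (0.5, 2.0),
}
COMMON = (0.2, 0.5)  # Salt minion and Kubelet, required on any Node
SAFETY_MARGIN = 0.5  # the recommended additional 50% of resources


def node_requirements(roles, margin=SAFETY_MARGIN):
    """Return (cpu_cores, ram_gb) for a Node with the given roles, margin included."""
    cpu, ram = COMMON
    for role in roles:
        role_cpu, role_ram = ROLE_REQUIREMENTS[role]
        cpu += role_cpu
        ram += role_ram
    factor = 1 + margin
    return round(cpu * factor, 2), round(ram * factor, 2)


if __name__ == "__main__":
    cpu, ram = node_requirements(["bootstrap", "master", "etcd", "infra"])
    print(f"Node with all four roles: {cpu} cores, {ram} GB RAM")
```

For a Node carrying the bootstrap, master, etcd, and infra roles, this gives about 4.05 cores and 9.75 GB of RAM before any application workload is counted.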

Consider the official recommendations for etcd sizing, as the stability of a MetalK8s installation depends on the stability of the backing etcd (see the etcd section for more details). Prometheus and Alertmanager also require storage, as explained in Provision Storage for Services.

Deploying with Cloud Providers

When installing in a virtual environment, such as AWS EC2 or OpenStack, adjust network configurations carefully: virtual environments often add a layer of security at the port level, which must be disabled or circumvented with IP-in-IP encapsulation.

Also note that Kubernetes has numerous integrations with existing cloud providers to provide easier access to proprietary features, such as load balancers. For more information, review this topic.